For more than a hundred years, we humans have been dreaming, thinking, writing, singing, and making movies about machines that can think, reason, and be intelligent in a way similar to ours. Works such as Samuel Butler’s “Erewhon” (1872), Edgar Allan Poe’s “Maelzel’s Chess Player”, and the 1927 film “Metropolis” explore the idea that machines could think and reason like humans. These ideas did not come from magic or fantasy: their authors drew on the automata of ancient Greece and Egypt and on the concepts of Aristotle, Ramon Llull, Hobbes, and thousands of other philosophers.

Their conception of the human mind convinced them that all rational thought could be expressed as algebra or logic. Later, the arrival of circuits, computers, and Moore’s Law kept people speculating that human-level intelligence was just around the corner. Some hail it as the savior of mankind, while others depict disaster: the rise of a second intelligent entity that crushes the first (humanity).

The flame of computerized artificial intelligence has burned brightly several times: in the 1950s, the 1980s, and the 2010s. Unfortunately, each of the two previous AI booms was followed by an “AI winter”, when interest and funding faded because the technology failed to meet expectations. These winters are often blamed on a lack of computing power, an insufficient understanding of the brain, or hype and excessive speculation. In our current AI summer, most AI researchers focus on increasing the depth of their neural networks using steadily growing computing power. Despite their name, neural networks are only loosely inspired by the neurons in the brain, and the similarities are superficial.

Some researchers believe that human-level general intelligence can be achieved by adding more and more layers to these simplified convolutional systems and feeding them ever-increasing amounts of data. This view is supported by the incredible things these networks can produce, which get better every year. However, although deep neural networks have produced many miracles, each one is still narrowly focused and good at only one thing. A superhuman Atari-playing AI cannot make music or reason about weather patterns unless humans add those capabilities. In addition, the quality of the input data strongly affects the quality of the network, and its ability to make inferences is limited, which leads to disappointing results in some areas. Some people think that recurrent neural networks will never achieve the general intelligence and flexibility that our brains provide.

However, some researchers are trying to create something more brain-like by, you guessed it, more closely mimicking the brain. Given that we are in a golden age of computer architecture, now seems like the right time to build new hardware. This type of hardware is called neuromorphic hardware.

What is neuromorphic computing?

“Neuromorphic” is a fancy term for any software or hardware that tries to emulate or simulate the brain. Although we still don’t know much about the brain, we have made some remarkable progress in the past few years. One generally accepted theory is the columnar hypothesis, which states that the neocortex (widely regarded as the place where decisions are made and information is processed) is made up of millions of cortical columns, or cortical modules. Other parts of the brain, such as the hippocampus, have their own recognizable structures that differ from those of the neocortex.

Although the layers of a recurrent neural network (RNN) are typically fully connected, the real brain is very picky about what connects to what. The common model of the visual system is a hierarchical pyramid: the bottom layer extracts features, and each subsequent layer extracts more abstract features. Most of the brain circuits analyzed so far show a variety of hierarchies in which connections loop back on themselves. Feedback and feedforward connections reach across multiple levels of the hierarchy. This “skipping” is the norm rather than the exception, suggesting that this structure may be key to the properties our brain exhibits.
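To make the difference from a plain layer-by-layer stack concrete, here is a small sketch that labels connections in a toy hierarchy as feedforward, feedback, level-skipping, or recurrent. The five levels and the edge list are hypothetical, chosen only for illustration; they are not taken from any measured brain circuit. In a strict feedforward stack every edge would come out as plain “feedforward”.

```python
from collections import Counter

# Hypothetical five-level hierarchy: level 0 extracts low-level features,
# level 4 holds the most abstract ones. Edges are (source_level, target_level).
EDGES = [
    (0, 1), (1, 2), (2, 3), (3, 4),   # strict layer-to-layer feedforward
    (0, 2), (1, 4),                   # feedforward connections that skip levels
    (3, 1), (4, 2),                   # feedback connections that skip levels
    (2, 2),                           # a loop within a single level
]


def classify(src, dst):
    """Label a connection by the direction and distance it travels in the hierarchy."""
    step = dst - src
    if step == 0:
        return "lateral/recurrent"
    direction = "feedforward" if step > 0 else "feedback"
    if abs(step) == 1:
        return direction
    return f"{direction} (skips {abs(step) - 1} level(s))"


def summarize(edges):
    """Count how many connections fall into each category."""
    return Counter(classify(src, dst) for src, dst in edges)


if __name__ == "__main__":
    for src, dst in EDGES:
        print(f"level {src} -> level {dst}: {classify(src, dst)}")
    print(dict(summarize(EDGES)))
```

Representing the network as an explicit edge list, rather than as dense layer-to-layer weight matrices, is what makes this kind of sparse, level-skipping wiring easy to express.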

This brings us to the next point: integration. Most neuromorphic networks use the leaky integrate-and-fire model. In an RNN, every node sends a signal at every time step, whereas a real neuron fires only when its membrane potential reaches a threshold (the actual situation is more complicated than this). Artificial neural networks (ANNs) with this more biologically accurate property are called spiking neural networks (SNNs). The leaky integrate-and-fire model is less biologically accurate than alternatives such as the Hindmarsh-Rose model or the Hodgkin-Huxley model, which capture more of a real neuron’s electrical behavior (Hodgkin-Huxley, for example, models individual ion-channel currents), but those models are much more expensive to compute. Because neurons are not firing all the time, numbers have to be represented as spike trains, with values encoded as a rate code, as spike timing, or as a frequency code.
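The sketch below shows the two ideas from the paragraph above in a few lines: a leaky integrate-and-fire neuron, whose potential leaks toward rest, integrates its input, and spikes and resets on crossing a threshold, plus two simple ways of turning a number into spikes (a rate code and a spike-time, or latency, code). The units are arbitrary, the integration uses a simple forward-Euler step, and all parameter names and defaults (`tau`, `v_threshold`, `max_prob`, and so on) are illustrative assumptions, not values from any particular simulator or chip.

```python
import random


def simulate_lif(current, dt=1.0, tau=20.0, r=1.0,
                 v_rest=0.0, v_reset=0.0, v_threshold=1.0):
    """Leaky integrate-and-fire neuron, integrated with forward Euler.

    Model: tau * dV/dt = -(V - v_rest) + r * I(t). When V reaches
    v_threshold the neuron spikes and V is reset to v_reset.
    Returns (spike step indices, membrane-potential trace).
    """
    v = v_rest
    spikes, trace = [], []
    for step, i_in in enumerate(current):
        v += (dt / tau) * (-(v - v_rest) + r * i_in)  # leak toward rest, integrate input
        if v >= v_threshold:                          # threshold crossed: fire
            spikes.append(step)
            v = v_reset                               # reset after the spike
        trace.append(v)
    return spikes, trace


def rate_encode(value, n_steps, max_prob=0.5, rng=None):
    """Rate code: a value in [0, 1] becomes a random spike train whose
    per-step firing probability is value * max_prob."""
    rng = random.Random(0) if rng is None else rng
    p = min(max(value, 0.0), 1.0) * max_prob
    return [1 if rng.random() < p else 0 for _ in range(n_steps)]


def latency_encode(value, t_max):
    """Spike-time (latency) code: stronger inputs fire earlier.
    Returns the step of a single spike in [0, t_max - 1], or None if value <= 0."""
    if value <= 0:
        return None
    return round((1.0 - min(value, 1.0)) * (t_max - 1))


if __name__ == "__main__":
    for level in (0.9, 1.5, 3.0):
        spikes, _ = simulate_lif([level] * 200)
        print(f"constant input {level}: {len(spikes)} spikes in 200 steps")
    # Drive the neuron with a rate-coded spike train instead of a constant current.
    train = rate_encode(0.8, 200)
    spikes, _ = simulate_lif([5.0 * s for s in train])
    print(f"rate-coded input: {sum(train)} input spikes -> {len(spikes)} output spikes")
    print("latency code for 0.25 in a 101-step window:", latency_encode(0.25, 101))
```

With a constant input of 0.9 the neuron never fires, because its steady-state potential (`v_rest + r * I` = 0.9) sits below the threshold of 1.0; stronger inputs reach the threshold sooner and therefore fire more often. That is the sense in which information lives in *when* and *how often* a neuron spikes rather than in a value emitted every time step.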

Where are we now?

Some groups have been directly simulating neurons, such as the OpenWorm project, which simulates the 302 neurons of the roundworm Caenorhabditis elegans. The current goals of many of these projects are to keep increasing the number of neurons and the simulation accuracy, and to improve program efficiency. For example, SpiNNaker, developed at the University of Manchester, is a supercomputer built from low-power cores and designed to simulate one billion neurons in real time. The machine reached 1 million cores at the end of 2018, and in 2019 a large grant was announced to fund the construction of the second-generation machine (SpiNNcloud) in Germany.

Many companies, governments, and universities are exploring exotic materials and technologies for making artificial neurons, such as memristors, spin-torque oscillators, and magnetic Josephson junction (MJJ) devices. Although many of them look very promising in simulation, there is a huge gap between the dozen neurons in a simulation (or on a small development board) and the thousands or even billions needed to achieve true human-level capabilities.

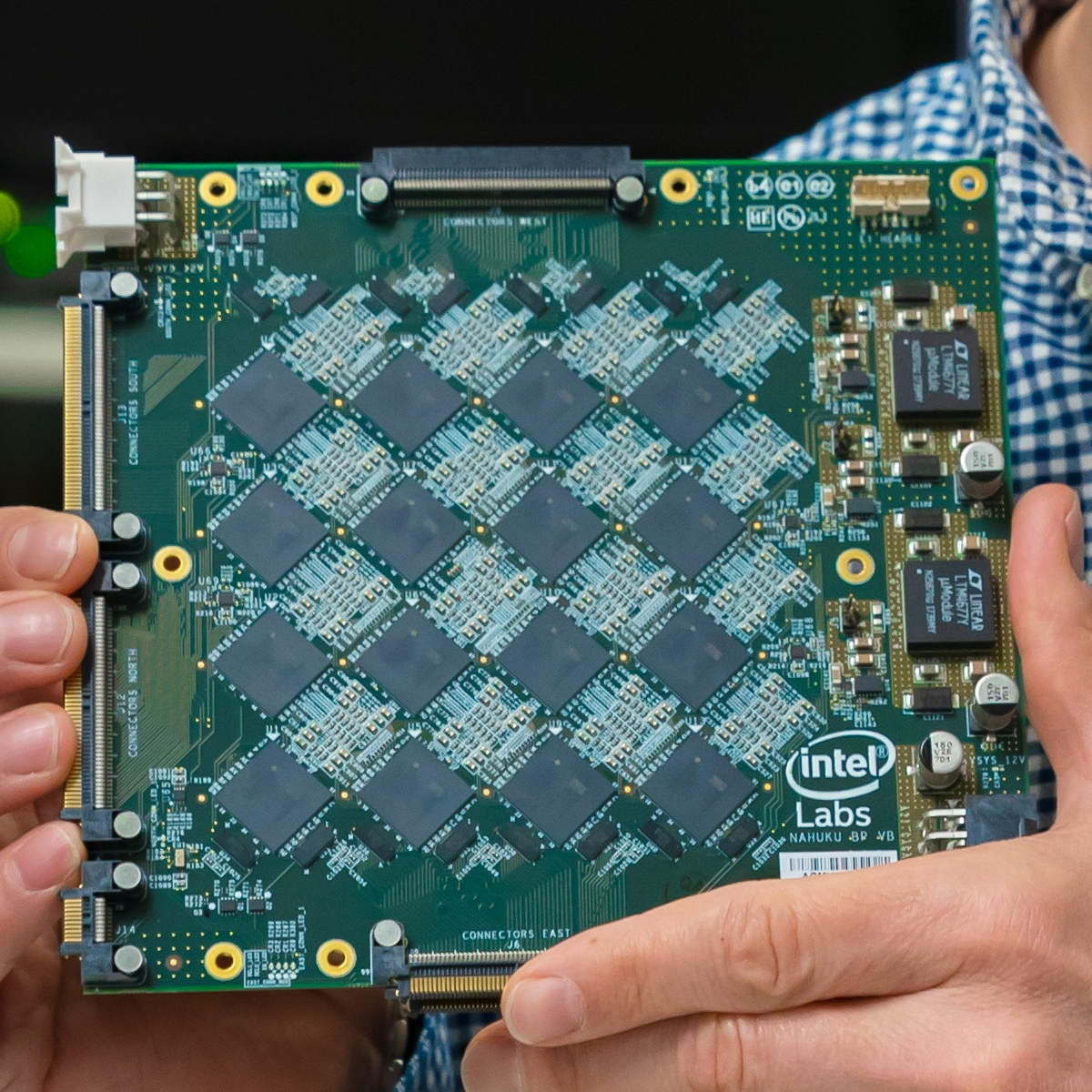

Other groups, such as IBM, Intel, BrainChip, and various universities, are trying to use existing CMOS technology to create hardware SNN chips. One such platform is Intel’s Loihi chip, which can be combined into larger systems. Early last year (2020), Intel researchers used a grid of 768 Loihi chips to implement nearest-neighbor search. The 100-million-neuron machine showed promising results, offering superior latency for systems with large precomputed indexes and allowing new entries to be inserted in O(1) time.
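Why would insertion be O(1)? A rough intuition, as we understand the general approach: each stored vector becomes the input weights of one neuron, a query drives all of those neurons at once, and the most similar entries reach threshold and spike first. Adding an entry only means programming one more neuron, with no tree or graph to rebuild. The toy below emulates that idea in plain Python on conventional hardware. It is not Intel’s implementation, the class and parameter names (`SpikeTimeIndex`, `t_max`) are hypothetical, and the linear similarity-to-spike-time mapping is an assumption made for illustration. Here the query loop is O(n) because the “neurons” are evaluated one after another, whereas neuromorphic hardware evaluates them in parallel.

```python
import math


class SpikeTimeIndex:
    """Toy nearest-neighbour index: each stored vector acts as a 'neuron'
    whose weights are the normalized vector; more similar neurons spike earlier."""

    def __init__(self, t_max=100.0):
        self.t_max = t_max      # length of the simulated time window (hypothetical)
        self.weights = []       # one normalized weight vector per stored entry
        self.labels = []

    @staticmethod
    def _normalize(vec):
        norm = math.sqrt(sum(x * x for x in vec))  # assumes a non-zero vector
        return [x / norm for x in vec]

    def insert(self, label, vec):
        # O(1) (amortized): storing an entry just "programs" one more neuron.
        self.weights.append(self._normalize(vec))
        self.labels.append(label)

    def query(self, vec, k=1):
        q = self._normalize(vec)
        spike_times = []
        for label, w in zip(self.labels, self.weights):
            similarity = sum(a * b for a, b in zip(q, w))    # cosine similarity in [-1, 1]
            # Map similarity to a firing time: 1.0 fires at t=0, -1.0 at t=t_max.
            t = (1.0 - similarity) / 2.0 * self.t_max
            spike_times.append((t, label))
        spike_times.sort()                                    # earliest spikes first
        return [label for _, label in spike_times[:k]]


if __name__ == "__main__":
    index = SpikeTimeIndex()
    index.insert("a", [1.0, 0.0])
    index.insert("b", [0.0, 1.0])
    index.insert("c", [1.0, 1.0])
    print(index.query([0.9, 0.1], k=2))
```

Reading out the first k neurons to fire is what turns a race between spikes into a k-nearest-neighbor answer.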

The Human Brain Project is a large-scale project that aims to deepen our understanding of biological neural networks. It has a wafer-scale system called BrainScaleS-1, which emulates neurons with analog, mixed-signal circuits. BrainScaleS consists of 20 wafers (8 inches each), each with 200,000 emulated neurons. The successor system (BrainScaleS-2) is currently under development, with an estimated completion date of 2023.

The Blue Brain Project is a Swiss-led research effort to simulate the mouse brain in biological detail. Although a mouse brain is not a human brain, the papers and models the project publishes are invaluable in advancing our progress toward useful neuromorphic artificial neural networks.

The consensus is that we are very, very early in trying to create something that can do meaningful work. The biggest obstacle is that we still don’t know much about how the brain is wired and how it learns. Once you start working with networks of this size, the central challenge becomes how to train them.

Do we even need neuromorphic hardware?

It can be argued that we don’t even need neuromorphic hardware. Techniques like inverse reinforcement learning (IRL) let the machine learn a reward function rather than a network. Simply by observing behavior, you can model what that behavior is trying to achieve and recreate it with the learned reward function, assuming that the expert (the actor being observed) is acting optimally. Further research is underway on handling sub-optimal experts, inferring both what they are doing and what they are trying to do.
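As a rough illustration of “learning the reward instead of the behavior”, here is a drastically simplified, one-step version of inverse reinforcement learning: a perceptron-style update, in the spirit of max-margin IRL, that searches for linear reward weights under which every observed expert choice scores best. Real IRL works over multi-step trajectories in a full Markov decision process; the single-step setting, the two features (think “progress” and “risk”), and the demonstration data are all hypothetical assumptions for this sketch.

```python
def score(w, phi):
    """Linear reward: r(phi) = w . phi."""
    return sum(wi * xi for wi, xi in zip(w, phi))


def best_action(w, candidates):
    """Index of the candidate with the highest reward under w (ties -> first)."""
    return max(range(len(candidates)), key=lambda i: score(w, candidates[i]))


def learn_reward(demos, n_features, max_epochs=1000):
    """Perceptron-style inverse RL in a one-step setting.

    demos: list of (candidates, expert_choice), where candidates is a list of
    feature vectors and expert_choice is the index the expert picked.
    Returns weights w under which every expert choice is optimal.
    """
    w = [0.0] * n_features
    for _ in range(max_epochs):
        mistakes = 0
        for candidates, expert in demos:
            guess = best_action(w, candidates)
            if guess != expert:
                # Nudge the reward toward the expert's choice and away from ours.
                w = [wi + e - g for wi, e, g in
                     zip(w, candidates[expert], candidates[guess])]
                mistakes += 1
        if mistakes == 0:
            return w
    raise RuntimeError("no reward found that explains every demonstration")


# Hypothetical demonstrations: features are (progress, risk). The expert secretly
# prefers progress and avoids risk, but the learner only sees which option was picked.
DEMOS = [
    ([(1.0, 0.0), (0.0, 1.0), (0.5, 0.5)], 0),
    ([(0.2, 0.9), (0.8, 0.1)], 1),
    ([(0.3, 0.3), (0.9, 0.6), (0.05, 0.0)], 1),
]

if __name__ == "__main__":
    w = learn_reward(DEMOS, n_features=2)
    print("learned reward weights:", w)
    # The learned reward can now be used to act in a situation the expert never saw.
    print("choice in a new situation:", best_action(w, [(0.7, 0.2), (0.9, 0.9)]))
```

The learned weights come out positive for progress and negative for risk, so the agent recovers the expert’s preferences rather than memorizing the specific choices it observed.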

Many people will continue to improve the simplified networks we already have by giving them better rewards. For example, a recent IEEE article on replicating part of a dragonfly’s brain with a simple three-layer neural network showed good results from a methodical, well-informed approach. Although the trained neural network does not perform as well as dragonflies in the wild, it is hard to say whether the gap lies in the network or simply in the dragonfly’s flying ability, which outclasses that of other insects.

Every year, deep learning techniques produce better and more powerful results. It seems that within one or two papers, a given field goes from interesting to amazing to jaw-dropping. Since we don’t have a crystal ball, who knows? Maybe if we keep following this path, we will stumble upon something more general that can be adapted to our existing deep learning networks.

What can hackers do now?

If you want to get involved in neuromorphic work, many of the projects mentioned in this article are open source, and their datasets and models can be found on GitHub and other code-hosting sites. There are incredible open-source projects out there, such as NEURON from Yale University or the NEST SNN simulator. Many people share their experiments on Open Source Brain. You can even build your own neuromorphic hardware, like NeuroBytes, a 2015 Hackaday Prize project. If you want to read more, this 2017 survey of neuromorphic hardware is an excellent snapshot of the field at the time.

Although the road ahead is still long, the future of neuromorphic computing looks promising.